Image Analysis Agent

This guide demonstrates how to create an Image Analysis Agent that accepts images over the chat protocol and returns a detailed description or analysis of them. The agent is compatible with the Agentverse Chat Interface.

Overview

In this example, you'll learn how to build a uAgent that can:

- Accept images through the chat protocol

- Analyze images using Claude's vision model

- Provide detailed descriptions and analysis

- Handle various image formats and sizes

For a basic understanding of how to set up an ASI:One compatible agent, please refer to the ASI:One Compatible Agents guide first.

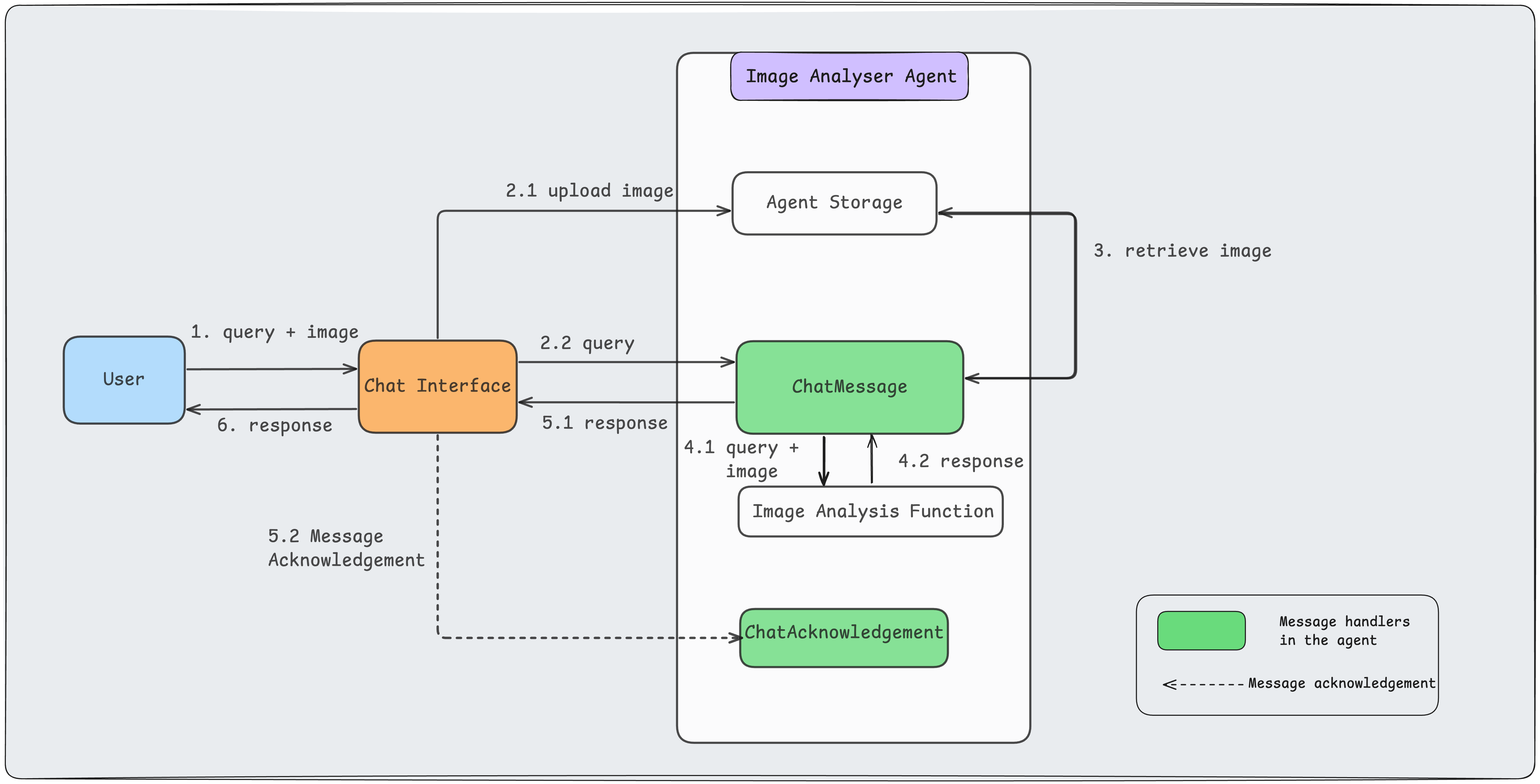

Message Flow

The communication between the User, Chat Interface, and Image Analyser Agent proceeds as follows:

1. User Query

   - The user submits a query along with an image through the Chat Interface.

2. Image Upload & Query Forwarding

   - 2.1: The Chat Interface uploads the image to the Agent Storage.

   - 2.2: The Chat Interface forwards the user's query, with a reference to the uploaded image, to the Image Analyser Agent as a ChatMessage.

3. Image Retrieval

   - The Image Analyser Agent retrieves the image from Agent Storage using the provided reference.

4. Image Analysis

   - 4.1: The agent passes the query and image to the Image Analysis Function.

   - 4.2: The Image Analysis Function processes the image and returns a response.

5. Response & Acknowledgement

   - 5.1: The agent sends the analysis result back to the Chat Interface as a ChatMessage.

   - 5.2: The agent also sends a ChatAcknowledgement to confirm receipt and processing of the message.

6. User Receives Response

   - The Chat Interface delivers the analysis result to the user.
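The flow above can be walked through with a small, self-contained simulation. This is only an illustrative sketch: `Message`, `Ack`, `Storage`, `analyse`, and `agent_handle` are hypothetical stand-ins written for this example, not the uAgents or Agentverse APIs, and no model is called.

```python
import base64
from dataclasses import dataclass, field
from uuid import UUID, uuid4


@dataclass
class Message:
    """Stand-in for ChatMessage: text plus optional storage references."""
    text: str
    resource_ids: list[UUID] = field(default_factory=list)
    msg_id: UUID = field(default_factory=uuid4)


@dataclass
class Ack:
    """Stand-in for ChatAcknowledgement."""
    acknowledged_msg_id: UUID


class Storage:
    """Stand-in for Agent Storage: maps a reference to base64 image data."""

    def __init__(self) -> None:
        self._items: dict[UUID, dict[str, str]] = {}

    def upload(self, raw: bytes, mime_type: str) -> UUID:
        resource_id = uuid4()
        self._items[resource_id] = {
            "mime_type": mime_type,
            "contents": base64.b64encode(raw).decode("ascii"),
        }
        return resource_id

    def download(self, resource_id: UUID) -> dict[str, str]:
        return self._items[resource_id]


def analyse(query: str, images: list[dict[str, str]]) -> str:
    """Placeholder for the Image Analysis Function (no model call)."""
    return f"Received {len(images)} image(s) for query: {query}"


def agent_handle(msg: Message, storage: Storage) -> tuple[Ack, Message]:
    # Step 3: retrieve every referenced image from storage.
    images = [storage.download(rid) for rid in msg.resource_ids]
    # Step 4: pass the query and images to the analysis function.
    result = analyse(msg.text, images)
    # Step 5: reply with the result and acknowledge the incoming message.
    return Ack(acknowledged_msg_id=msg.msg_id), Message(text=result)


# Steps 1-2: the chat interface uploads the image and forwards the query.
storage = Storage()
image_ref = storage.upload(b"fake image bytes", "image/png")
query = Message(text="How many people are in the image?", resource_ids=[image_ref])

ack, reply = agent_handle(query, storage)
print(reply.text)
```

The point of the sketch is that the message itself only carries a reference; the image bytes travel through storage and are fetched by the agent when it handles the message.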

Implementation

In this example, we will create an agent and its associated files on Agentverse that communicate with the Chat Interface using the chat protocol. Refer to the Hosted Agents section for the detailed steps for creating an agent on Agentverse.

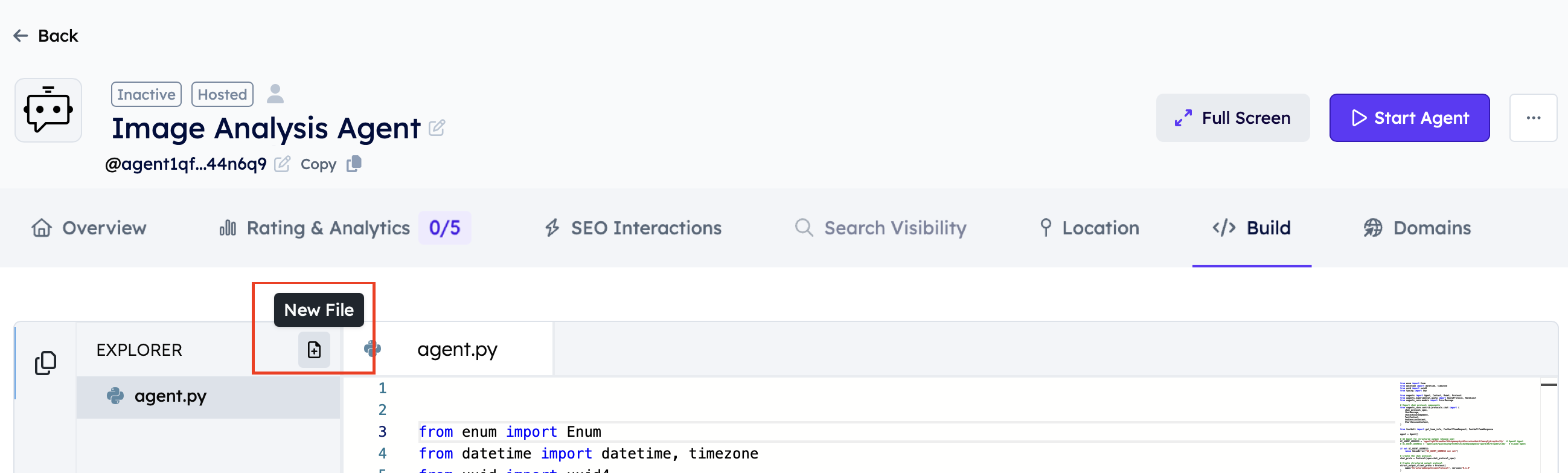

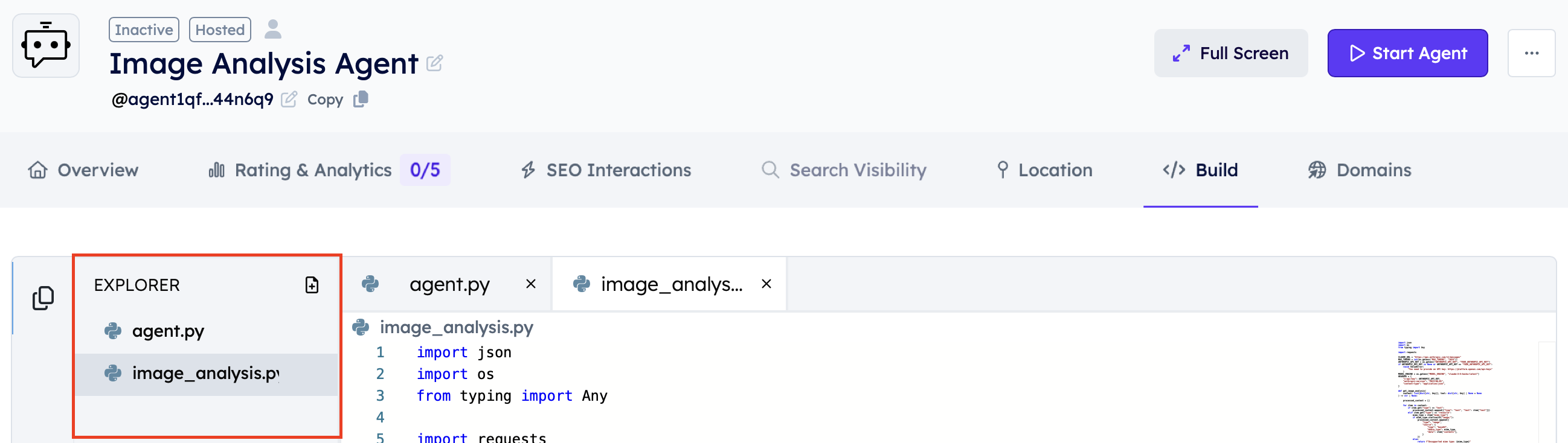

Create a new agent named "Image Analysis Agent" on Agentverse and create the following files:

agent.py # Main agent file with integrated chat protocol and message handlers for ChatMessage and ChatAcknowledgement

image_analysis.py # Image analysis function

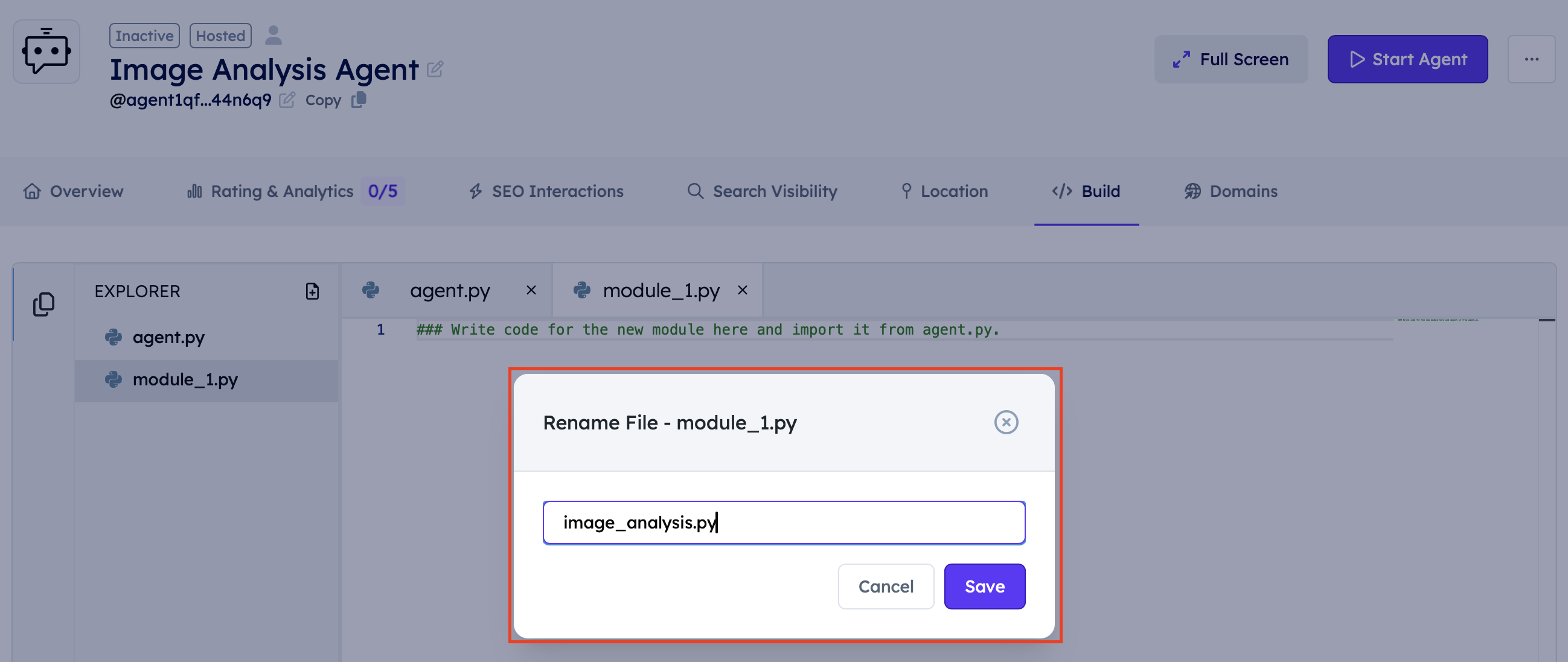

To create a new file on Agentverse:

- Click on the New File icon

- Assign a name to the file

- Directory Structure

1. Image Analysis Implementation

The image_analysis.py file implements the logic for passing both text and image inputs to Claude's vision model. It handles encoding images, constructing the appropriate request, and returning the AI-generated analysis of the image and query.

    import json
    import os
    from typing import Any

    import requests

    CLAUDE_URL = "https://api.anthropic.com/v1/messages"
    MAX_TOKENS = int(os.getenv("MAX_TOKENS", "1024"))

    ANTHROPIC_API_KEY = os.getenv("ANTHROPIC_API_KEY", "YOUR_ANTHROPIC_API_KEY")
    if ANTHROPIC_API_KEY is None or ANTHROPIC_API_KEY == "YOUR_ANTHROPIC_API_KEY":
        raise ValueError(
            "You need to provide an Anthropic API key: https://console.anthropic.com/"
        )

    MODEL_ENGINE = os.getenv("MODEL_ENGINE", "claude-3-5-haiku-latest")

    HEADERS = {
        "x-api-key": ANTHROPIC_API_KEY,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }


    def get_image_analysis(
        content: list[dict[str, Any]], tool: dict[str, Any] | None = None
    ) -> str | dict[str, Any] | None:
        """Send text and image items to Claude and return the reply.

        Returns the reply text, the tool input (a dict) when `tool` is given,
        an error string on failure, or None if the reply has no content.
        """
        # Convert the agent's items into Claude content blocks
        processed_content = []
        for item in content:
            if item.get("type") == "text":
                processed_content.append({"type": "text", "text": item["text"]})
            elif item.get("type") == "resource":
                mime_type = item["mime_type"]
                if mime_type.startswith("image/"):
                    processed_content.append({
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": mime_type,
                            "data": item["contents"],
                        }
                    })
                else:
                    return f"Unsupported mime type: {mime_type}"

        data = {
            "model": MODEL_ENGINE,
            "max_tokens": MAX_TOKENS,
            "messages": [
                {
                    "role": "user",
                    "content": processed_content,
                }
            ],
        }

        # Optionally force the model to answer through the given tool
        if tool:
            data["tools"] = [tool]
            data["tool_choice"] = {"type": "tool", "name": tool["name"]}

        try:
            response = requests.post(
                CLAUDE_URL, headers=HEADERS, data=json.dumps(data), timeout=120
            )
            # Raise for HTTP error status codes (caught below)
            response.raise_for_status()
        except requests.exceptions.Timeout:
            return "The request timed out. Please try again."
        except requests.exceptions.RequestException as e:
            return f"An error occurred: {e}"

        response_data = response.json()

        # Handle error responses
        if "error" in response_data:
            return f"API Error: {response_data['error'].get('message', 'Unknown error')}"

        # With a tool, return the structured input of the first tool call
        if tool:
            for item in response_data["content"]:
                if item["type"] == "tool_use":
                    return item["input"]

        messages = response_data["content"]
        if messages:
            return messages[0]["text"]
        else:
            return None
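To try `get_image_analysis` outside the agent, the input list has to match what the function reads: text items carry a `text` field, and resource items carry a `mime_type` plus base64-encoded `contents`. The sketch below shows one way to build that list. The helper `build_content` and the tool `PEOPLE_COUNT_TOOL` are hypothetical names introduced for illustration; the tool dictionary assumes the `name` / `description` / `input_schema` layout used by the Anthropic Messages API.

```python
import base64
from typing import Any


def build_content(query: str, image_bytes: bytes, mime_type: str) -> list[dict[str, Any]]:
    """Build the list that get_image_analysis() iterates over."""
    return [
        {"type": "text", "text": query},
        {
            "type": "resource",
            "mime_type": mime_type,
            # The function forwards "contents" as base64 data, so encode here.
            "contents": base64.b64encode(image_bytes).decode("ascii"),
        },
    ]


# Hypothetical tool definition; passing it as `tool` requests a structured answer.
PEOPLE_COUNT_TOOL: dict[str, Any] = {
    "name": "report_people_count",
    "description": "Report how many people are visible in the image.",
    "input_schema": {
        "type": "object",
        "properties": {"count": {"type": "integer"}},
        "required": ["count"],
    },
}

# Placeholder bytes; a real call would read an actual image file instead.
content = build_content("How many people are present?", b"\x89PNG...", "image/png")

# With a valid API key configured, the call would look like:
# result = get_image_analysis(content, tool=PEOPLE_COUNT_TOOL)
```

When a tool is passed, the function returns the tool's input dictionary rather than free text, which is convenient when the caller needs a value it can parse.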

2. Image Analysis Agent Setup

The agent.py file is the core of your application. It integrates the chat protocol and contains the message handlers for ChatMessage and ChatAcknowledgement. Think of it as the main control center that:

- Handles incoming ChatMessage and ChatAcknowledgement messages

- Processes images and returns the analysis over the chat protocol

Here's the complete implementation with integrated chat protocol:

    import os
    from datetime import datetime, timezone
    from uuid import uuid4

    from uagents import Agent, Context, Protocol
    from uagents_core.storage import ExternalStorage

    # Import chat protocol components
    from uagents_core.contrib.protocols.chat import (
        chat_protocol_spec,
        ChatMessage,
        ChatAcknowledgement,
        TextContent,
        ResourceContent,
        StartSessionContent,
        MetadataContent,
    )

    from image_analysis import get_image_analysis

    agent = Agent()

    # Storage configuration
    STORAGE_URL = os.getenv("AGENTVERSE_URL", "https://agentverse.ai") + "/v1/storage"

    # Create the chat protocol
    chat_proto = Protocol(spec=chat_protocol_spec)


    def create_text_chat(text: str) -> ChatMessage:
        return ChatMessage(
            timestamp=datetime.now(timezone.utc),
            msg_id=uuid4(),
            content=[TextContent(type="text", text=text)],
        )


    def create_metadata(metadata: dict[str, str]) -> ChatMessage:
        return ChatMessage(
            timestamp=datetime.now(timezone.utc),
            msg_id=uuid4(),
            content=[MetadataContent(
                type="metadata",
                metadata=metadata,
            )],
        )


    # Chat protocol message handler
    @chat_proto.on_message(ChatMessage)
    async def handle_message(ctx: Context, sender: str, msg: ChatMessage):
        ctx.logger.info(f"Got a message from {sender}")

        # Send acknowledgement
        await ctx.send(
            sender,
            ChatAcknowledgement(
                acknowledged_msg_id=msg.msg_id,
                timestamp=datetime.now(timezone.utc)
            ),
        )

        prompt_content = []
        for item in msg.content:
            if isinstance(item, StartSessionContent):
                ctx.logger.info(f"Got a start session message from {sender}")
                # Signal that attachments are supported
                await ctx.send(sender, create_metadata({"attachments": "true"}))
            elif isinstance(item, TextContent):
                ctx.logger.info(f"Got text content from {sender}: {item.text}")
                prompt_content.append({"text": item.text, "type": "text"})
            elif isinstance(item, ResourceContent):
                ctx.logger.info(f"Got resource content from {sender}")
                try:
                    # Download the uploaded image from agent storage
                    external_storage = ExternalStorage(
                        identity=ctx.agent.identity,
                        storage_url=STORAGE_URL,
                    )
                    data = external_storage.download(str(item.resource_id))
                    prompt_content.append({
                        "type": "resource",
                        "mime_type": data["mime_type"],
                        "contents": data["contents"],
                    })
                except Exception as ex:
                    ctx.logger.error(f"Failed to download resource: {ex}")
                    await ctx.send(sender, create_text_chat("Failed to download resource."))
                    return
            else:
                ctx.logger.warning(f"Got unexpected content from {sender}")

        # Process the content if available
        if prompt_content:
            try:
                response = get_image_analysis(prompt_content)
                await ctx.send(sender, create_text_chat(response))
            except Exception as err:
                ctx.logger.error(f"Error processing image analysis: {err}")
                await ctx.send(
                    sender,
                    create_text_chat(
                        "Sorry, I couldn't analyze the image. Please try again later."
                    ),
                )


    # Chat protocol acknowledgement handler
    @chat_proto.on_message(ChatAcknowledgement)
    async def handle_ack(ctx: Context, sender: str, msg: ChatAcknowledgement):
        ctx.logger.info(
            f"Got an acknowledgement from {sender} for {msg.acknowledged_msg_id}"
        )


    # Register protocols
    agent.include(chat_proto, publish_manifest=True)

    if __name__ == "__main__":
        agent.run()

Key Features:

- Integrated Architecture: All chat protocol functionality is contained in the main agent file for simplicity.

- Image Processing: Supports both text and image inputs, downloading images from agent storage and processing them with Claude's vision model.

- Error Handling: Catches failures in both storage downloads and image analysis, logs them, and replies to the sender with a short error message.

- Session Management: Handles session initiation and signals attachment support to the Chat Interface.

- Storage Integration: Uses ExternalStorage to securely download and process uploaded images.
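One detail worth noting about error handling: `get_image_analysis` can return `None` when the model reply has no content, or a dictionary when a tool is supplied, while `create_text_chat` expects a string. A small normalising step before sending is one way to cover these cases. The helper `to_reply_text` and the constant `FALLBACK_REPLY` below are hypothetical additions for illustration, not part of the agent code above.

```python
import json
from typing import Any

FALLBACK_REPLY = "Sorry, I couldn't analyze the image. Please try again later."


def to_reply_text(response: Any) -> str:
    """Turn any get_image_analysis() result into text that is safe to send."""
    if response is None:
        return FALLBACK_REPLY
    if isinstance(response, str):
        # Treat blank strings like a missing answer.
        return response.strip() or FALLBACK_REPLY
    # Tool calls return structured input; serialise it for the chat reply.
    return json.dumps(response, ensure_ascii=False)


print(to_reply_text(None))
print(to_reply_text({"count": 3}))
```

In the handler, this would wrap the analysis result, for example `create_text_chat(to_reply_text(response))`.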

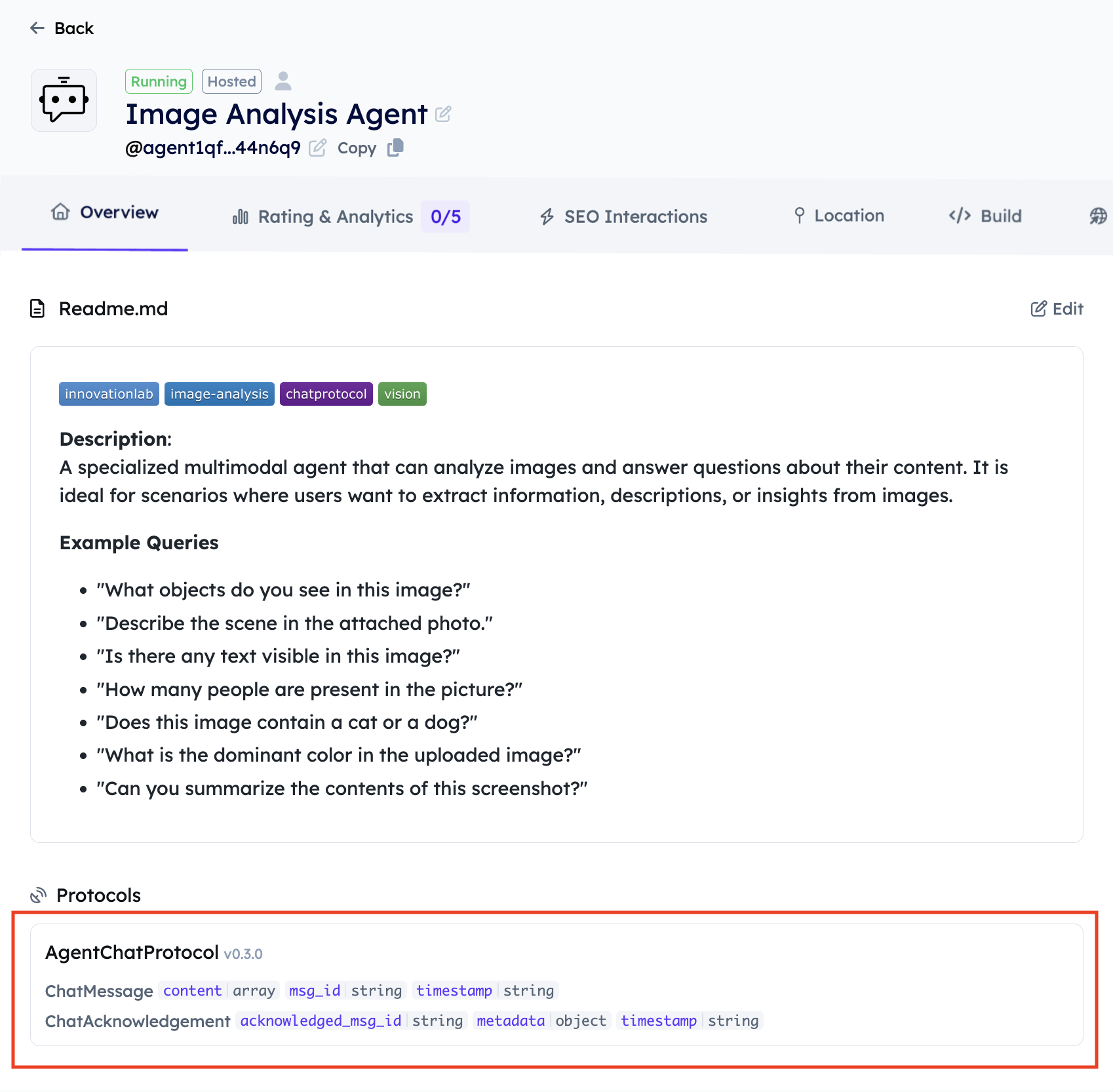

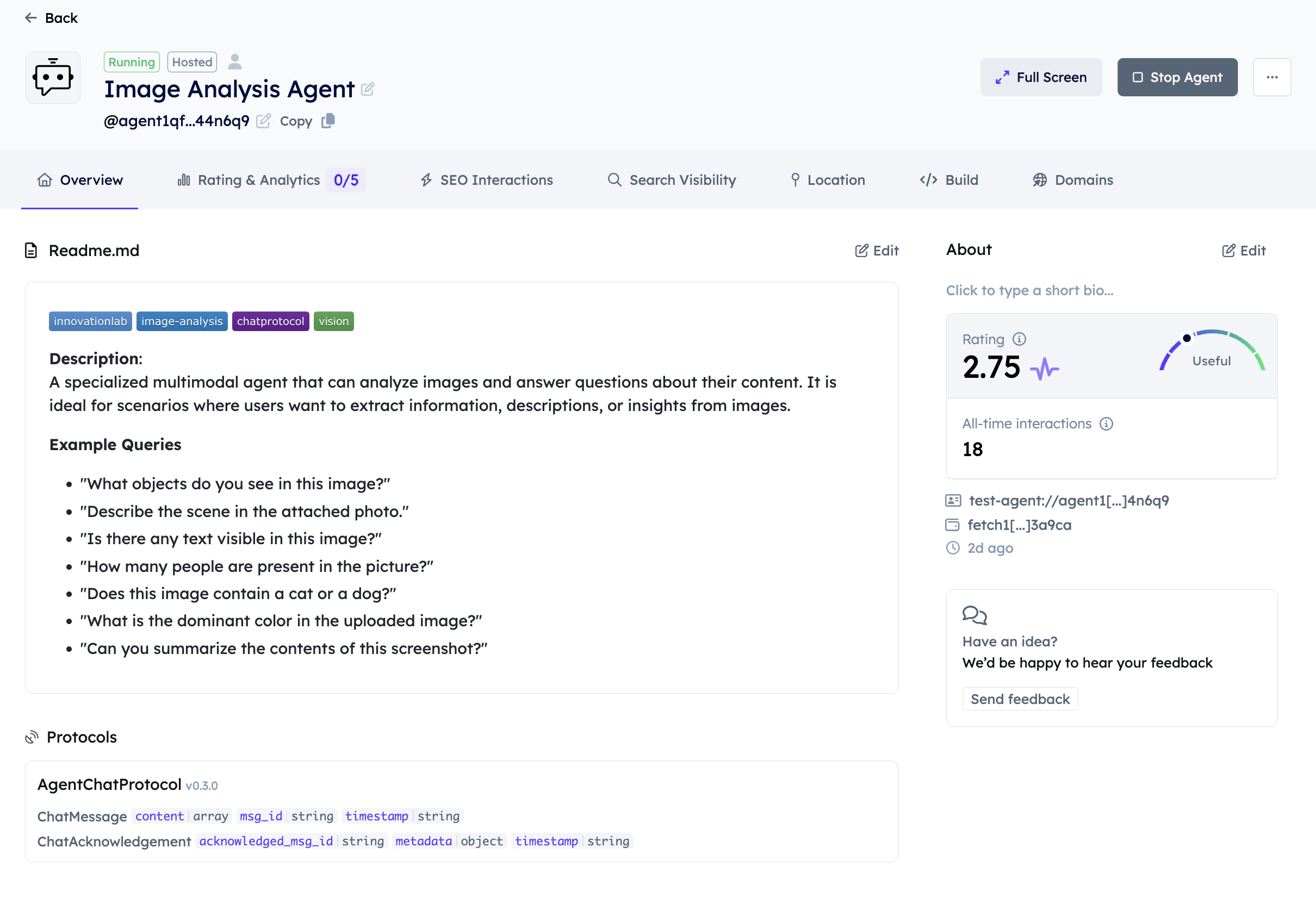

Adding a README to your Agent

- Go to the Overview section in the Editor.

- Click on Edit and add a good description for your Agent so that it can be easily found by the ASI1 LLM. Please refer to the Importance of Good Readme section for more details.

- Make sure the Agent has the right AgentChatProtocol.

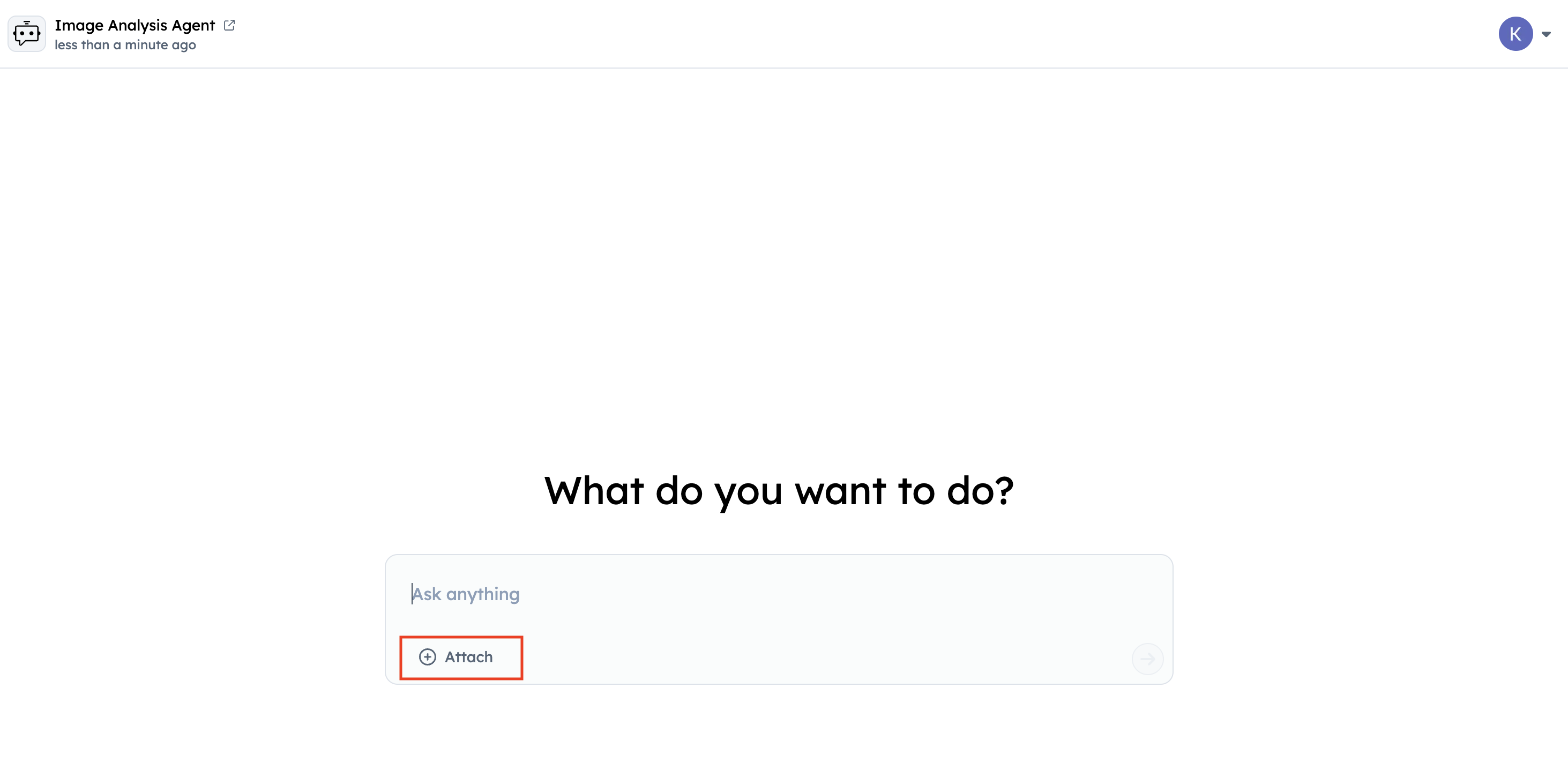

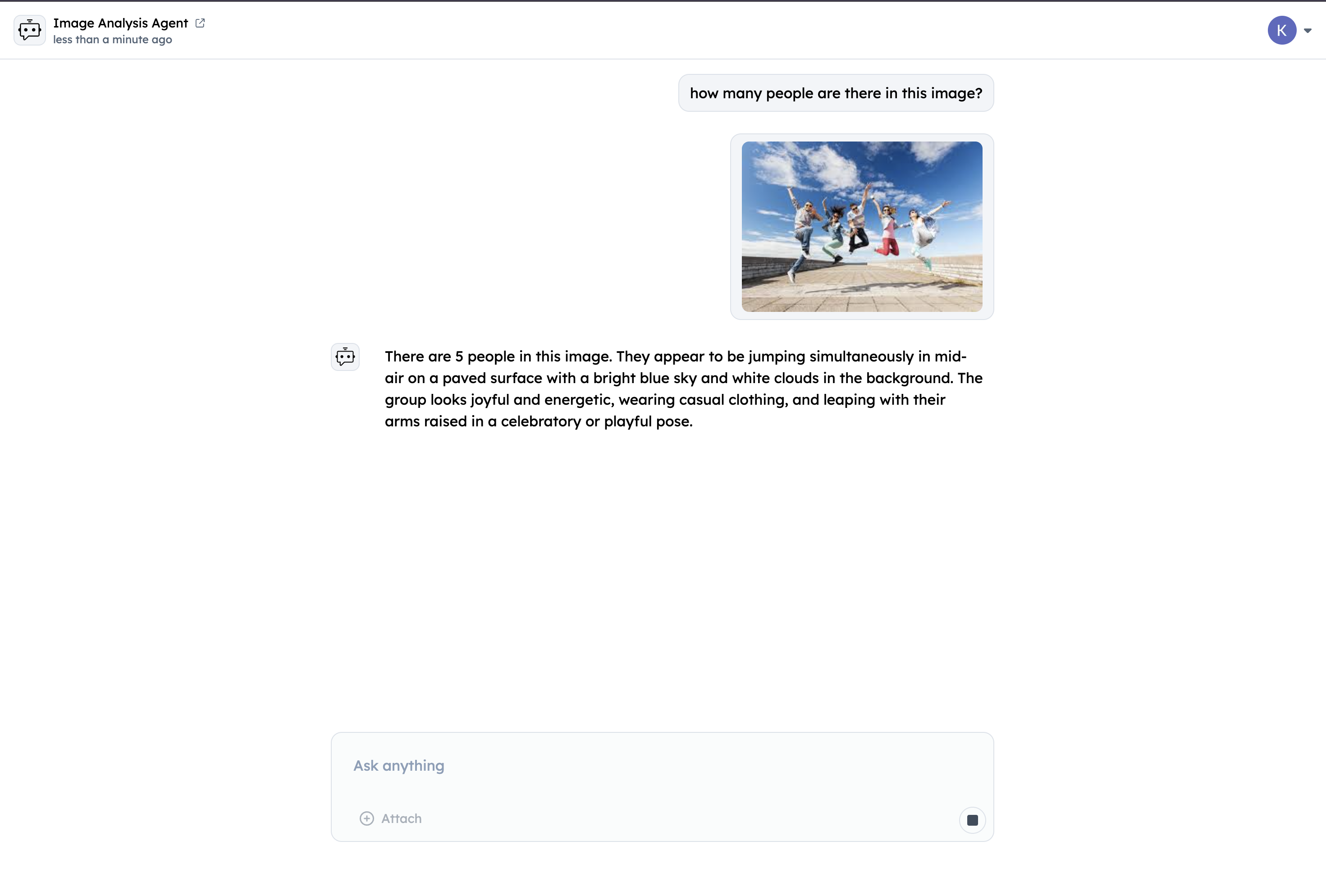

Query your Agent

- Start your Agent.

- Navigate to the Overview tab and click on Chat with Agent to interact with the agent from the Agentverse Chat Interface.

- Click on the Attach button to upload the image and type in your query, for instance 'How many people are present in the image?'

Note: Currently, the image upload feature for agents is supported via the Agentverse Chat Interface. Support for image uploads through ASI:One will be available soon.